Digital Puppeteers: How Algorithms Muzzle Free Will and Democracy


Written for session 29 – Algorithms and free will, what is the future of our freedom?

In the age of the digital revolution, algorithms have emerged as invisible puppeteers, subtly shaping our perceptions, our choices, and ultimately our freedom. While they offer unprecedented convenience, their potential for misuse raises concerns about manipulation, dependency, and the erosion of free will. This article examines the intricate relationship between algorithms, free will, and democracy, shedding light on their impact through real-world examples.

Algorithms wield the power to manipulate users’ emotions, exploit their vulnerabilities, limit their exposure to new ideas, and create echo chambers. Through carefully crafted content, they can evoke specific emotions and thereby influence decision-making. They can exploit users’ insecurities and loneliness to sell products or services that may not be in their best interests. And by tailoring content to users’ preferences, they can stifle diverse perspectives and hinder informed decision-making. The result is an increasingly polarized society trapped within echo chambers.

Political events have not been immune to the influence of algorithms. Countries such as Russia, the United States, Brazil, India, and the United Kingdom have witnessed the deployment of computational propaganda and cyber troops to manipulate public opinion and influence election outcomes. These tactics exploit the vulnerabilities of algorithms on social media platforms, further highlighting the potential threat they pose to democratic processes.

Unveiling Algorithmic Manipulation of Public Opinion in Indonesia

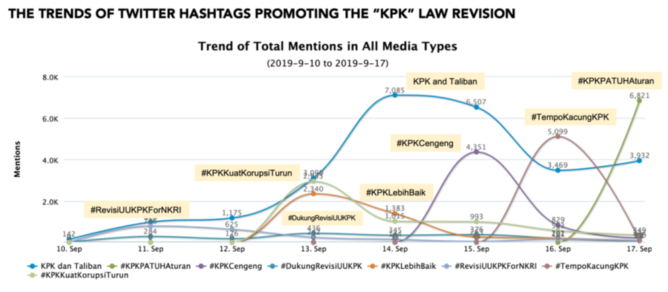

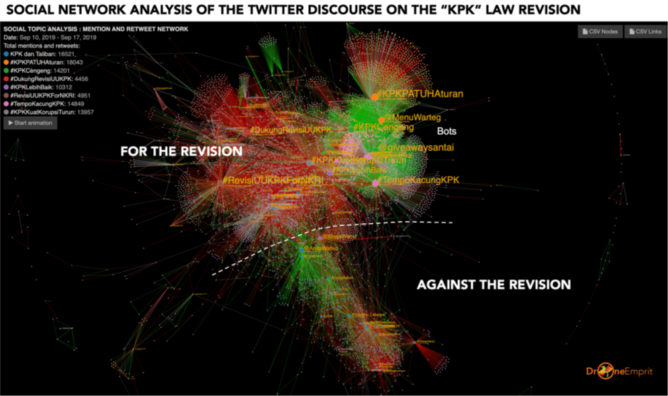

One platform active in social media analytics is Drone Emprit. Founded in 2014, Drone Emprit tracks political discourse on Indonesian social media. A collaborative research project on “Cyber Troops and Public Opinion Manipulation in Indonesia” (2022), funded by ANGIN-KNAW and co-led by Yatun Sastramidjaja and Wijayanto with Ismail Fahmi (Drone Emprit) and Ward Berenschot (KITLV, University of Amsterdam), has uncovered a significant increase in the use of cyber troops, sophisticated techniques, and disinformation campaigns aimed at manipulating public opinion in the country. This phenomenon poses a serious threat to democracy: it undermines free and fair elections, silences dissenting voices, and facilitates authoritarian rule.

Two critical case studies illustrate the problem in Indonesia. In the first, the sudden and controversial revision of the Law on the Corruption Eradication Commission (KPK) sparked public outcry and digital protests in September 2019. Proponents of the revision countered this public sentiment by deploying cyber troops who leveraged Twitter’s algorithms to push narratives supporting the changes. Using bots to amplify messages, hashtags to coordinate activity (Figure 1), and coordinated attacks to suppress dissent, they created a false impression of public support for the KPK law revision (Figure 2).

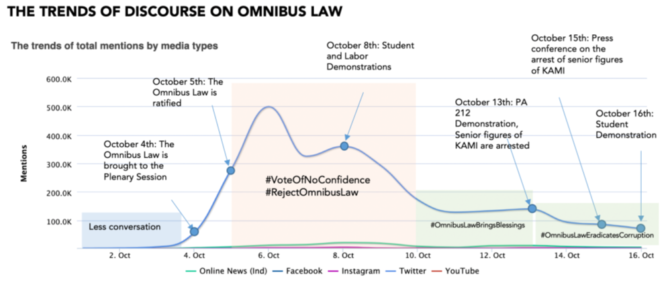

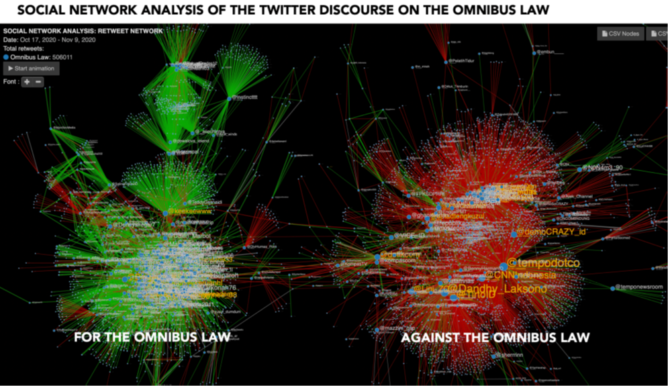

The second case revolves around the Omnibus Law on Job Creation, legislation perceived as favoring corporate interests over those of workers and indigenous peoples. Online opposition to the law, driven primarily by younger netizens, was strong until pro-law narratives unexpectedly began to dominate (Figure 3). Investigation traced this sudden shift to the government’s strategic online countermeasures, including cyber surveillance, counter-narratives, and the targeted discrediting of activists. This incident marked an unprecedented use of digital resources to suppress opposition.

These case studies exemplify the burgeoning role of algorithms in shaping public opinion and political narratives. The puppeteers of the digital age are increasingly using algorithmic strings to manipulate our choices, emotions, and exposure to ideas.

Seeking Proactive Strategies in the Battle Against Fake News in Indonesia

Yet can these puppet masters be manipulated for the public good? Fact-checking organizations like MAFINDO (Masyarakat Anti Fitnah Indonesia, or the Indonesian Anti-Slander Society) believe that algorithms could be harnessed to automatically detect and suppress disinformation. However, social media platforms, including Facebook, seem reluctant to adopt such measures, primarily because of their profitable, engagement-centric business model. In Indonesia, the predominant approach remains fact-checking, a traditionally slow and reactive method. Despite these efforts, many in the academic and media communities believe that by the time a rumor needs debunking, it is already too late.

Navigating the Future of Free Will and Public Opinion

Looking ahead, the question is how these algorithms will influence democracy and human free will. Given the persuasive nature of algorithms, there is a very real risk of people being nudged into choices and opinions that are not their own – a direct assault on free will. Furthermore, the potency of computational propaganda in manipulating public opinion could distort democratic processes.

However, it’s not all bleak. Awareness of the manipulative potential of these algorithms is growing, and so is the push for regulation. At the same time, more sophisticated tools are being developed to counter misinformation and manipulation. For instance, AI can be employed to identify and counteract false information automatically, and more effectively than traditional fact-checking. It’s crucial to strike a balance in which algorithms serve us rather than manipulate us. As the invisible puppet masters of the digital world, algorithms need to be put in their place – as tools serving humans, not the other way around.

Ismail Fahmi, Ph.D.

Founder of Drone Emprit and Media Kernels Indonesia